📅 Last updated: March 10, 2025

Hello! Today, we will explore the fundamental differences between concurrency and parallelism with a real-world analogy. Additionally, a FAQ section at the end will cover the most common questions on the topic.

Concurrency vs. Parallelism

It’s fairly common for developers to mix up the concepts of concurrency and parallelism, but they are actually quite distinct. Let’s approach these two concepts using a coffee shop analogy.

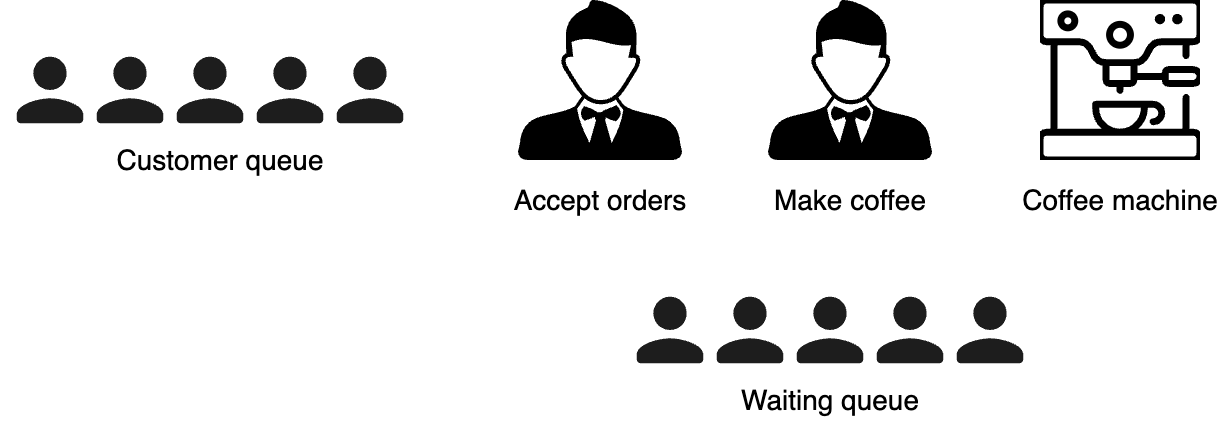

Imagine a coffee shop with one waiter who takes orders and makes coffee using a single machine. Customers wait in line, place their orders, and then wait for their coffee.

If the waiter struggles to keep up with the number of customers, the coffee shop owner may decide to speed up the overall process by hiring a second waiter and buying a second coffee machine. Now, two waiters can serve customers at the same time.

In this setup, the two waiters work independently. Each has their own coffee machine, so the shop can serve up to twice as many customers in the same amount of time. This is parallelism: executing multiple tasks simultaneously by adding more resources (waiters and machines).

If we want to scale further, we can keep adding more waiters and machines, but this approach has its limits. For example, buying multiple coffee machines can become overly expensive.

Instead of just adding more machines, we could rethink how the work is structured. One way to do this is to split the tasks between the waiters: one takes orders, and the other makes coffee. To avoid blocking the customer queue, we introduce a second queue where customers wait for their coffee (similar to Starbucks).

What we changed here is the overall structure, and that is concurrency: the work is divided into tasks that can be handled independently, even if they don’t happen at the same time.

In programming, asynchronous execution follows a similar approach: instead of waiting for a slow task to complete (e.g., making coffee), the system can switch to handling another task while waiting (e.g., taking a new order). This improves responsiveness and prevents blocking.
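To make this concrete, here is a minimal sketch in Go (the choice of Go is an assumption; the article itself is language-agnostic). A goroutine plays the coffee machine, and the hypothetical helpers `makeCoffee` and `serveAsync` show the caller taking another order instead of blocking until the coffee is ready. The `time.Sleep` call merely stands in for slow work.

```go
package main

import (
	"fmt"
	"time"
)

// makeCoffee is a hypothetical slow task: the sleep stands in for real
// work such as waiting on I/O. It reports the finished drink on done.
func makeCoffee(order string, done chan<- string) {
	time.Sleep(50 * time.Millisecond)
	done <- order + " ready"
}

// serveAsync starts the slow task without waiting for it, handles another
// order in the meantime, and returns the events in the order they happened.
func serveAsync() []string {
	done := make(chan string)
	var events []string

	go makeCoffee("latte", done) // start brewing; don't block here

	// While the machine is busy, keep working instead of waiting.
	events = append(events, "took order: espresso")

	// Block only when the result is actually needed.
	events = append(events, <-done)
	return events
}

func main() {
	fmt.Println(serveAsync()) // [took order: espresso latte ready]
}
```

The new order is always recorded first: the caller only waits at the `<-done` receive, which is the single point where it needs the coffee.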

Now, imagine each thread in an application represents a role: one thread for taking orders and another for making coffee. These threads work independently but must coordinate. For example, the order-taking thread needs to pass each order to the coffee-making thread so it knows what to prepare.
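The two roles can be sketched in Go with one goroutine per role and a channel as the hand-off between them. This is a minimal sketch under that assumption: the names `takeOrders`, `makeCoffees`, and `runShop` are hypothetical, and the `orders` channel plays the part of the second queue from the analogy.

```go
package main

import "fmt"

// takeOrders plays the order-taking role: it hands each order to the
// coffee maker through the orders channel, then closes it.
func takeOrders(customers []string, orders chan<- string) {
	for _, c := range customers {
		orders <- c
	}
	close(orders)
}

// makeCoffees plays the coffee-making role: it receives orders until the
// channel is closed and passes each finished drink along.
func makeCoffees(orders <-chan string, served chan<- string) {
	for o := range orders {
		served <- o + " ready"
	}
	close(served)
}

// runShop starts both roles and collects the finished drinks.
func runShop(customers []string) []string {
	orders := make(chan string)
	served := make(chan string)
	go takeOrders(customers, orders)
	go makeCoffees(orders, served)

	var drinks []string
	for d := range served {
		drinks = append(drinks, d)
	}
	return drinks
}

func main() {
	fmt.Println(runShop([]string{"latte", "espresso", "mocha"}))
	// [latte ready espresso ready mocha ready]
}
```

The channel is the only coordination point: neither role needs to know how the other works, only what flows between them.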

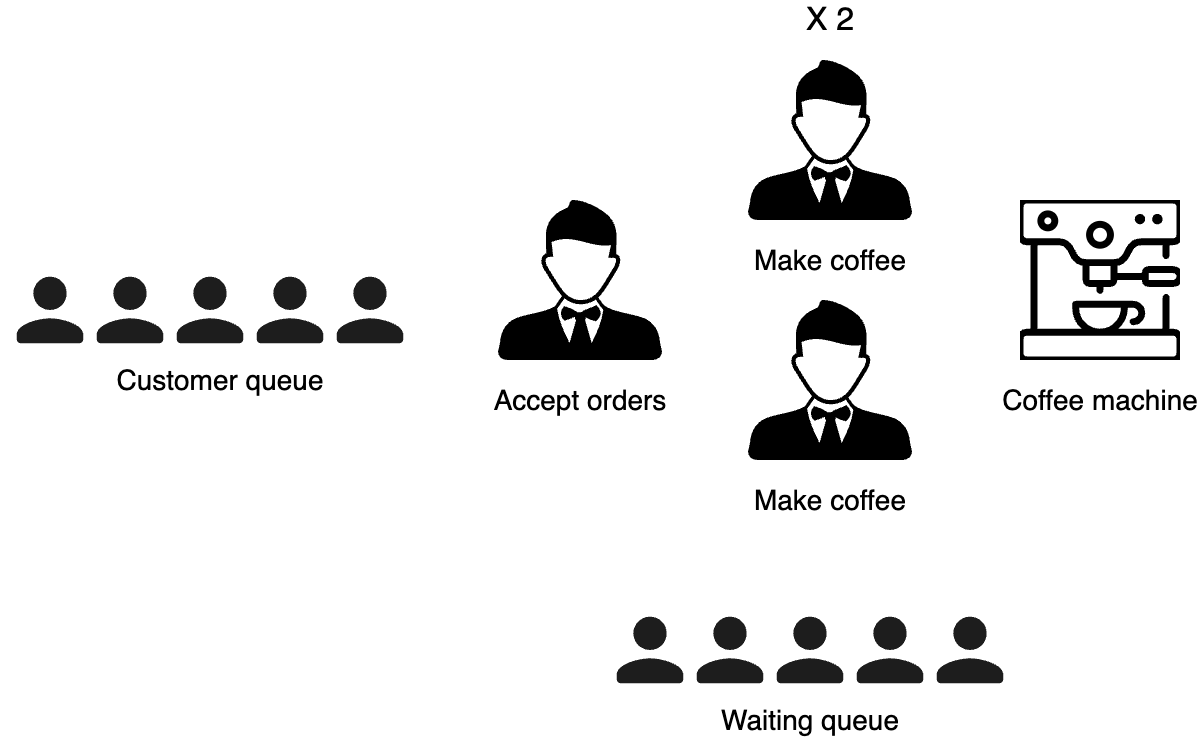

What if we want to increase the shop’s capacity further? Since making coffee takes longer than taking orders, we could hire another waiter specifically to make coffee.

Has the structure been changed? No. It’s still a three-step design:

1. Accept orders
2. Make coffee
3. Serve coffee

However, by adding another waiter just for coffee-making, we introduce parallelism into this specific step without altering the overall concurrency structure.
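Continuing with Go, the three steps can be sketched as a pipeline of channels. This is a minimal sketch using a fan-out pattern, chosen for illustration rather than as the only way to model it; `acceptOrders`, `makeCoffee`, and `serveCoffee` are hypothetical names. Adding a barista only changes how many goroutines read from the orders channel in step 2, while the pipeline itself stays the same.

```go
package main

import (
	"fmt"
	"sort"
	"sync"
)

// acceptOrders is step 1: it emits every order, then closes its channel.
func acceptOrders(customers []string) <-chan string {
	out := make(chan string)
	go func() {
		defer close(out)
		for _, c := range customers {
			out <- c
		}
	}()
	return out
}

// makeCoffee is step 2, run by `baristas` goroutines in parallel. They all
// read from the same orders channel, so each order is made exactly once.
func makeCoffee(orders <-chan string, baristas int) <-chan string {
	out := make(chan string)
	var wg sync.WaitGroup
	for i := 0; i < baristas; i++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for o := range orders {
				out <- o + " ready"
			}
		}()
	}
	// Close the output only once every barista has finished.
	go func() {
		wg.Wait()
		close(out)
	}()
	return out
}

// serveCoffee is step 3: it collects the drinks. Completion order depends
// on scheduling, so the result is sorted to make the output stable.
func serveCoffee(drinks <-chan string) []string {
	var served []string
	for d := range drinks {
		served = append(served, d)
	}
	sort.Strings(served)
	return served
}

func main() {
	orders := acceptOrders([]string{"latte", "espresso", "mocha", "flat white"})
	// Hiring another barista means changing 2 to 3 here; nothing else moves.
	fmt.Println(serveCoffee(makeCoffee(orders, 2)))
	// [espresso ready flat white ready latte ready mocha ready]
}
```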

Remember that while parallelism and concurrency are distinct, they can complement each other. Parallelism can exist without concurrency, but concurrency can enable parallelism: it provides a structure that splits a problem into parts, some of which may then be parallelized.

To summarize:

Concurrency is about structure: we design a solution so that its parts can be managed independently. This doesn’t necessarily mean they run at the same time, but rather that they are organized so that execution can be more efficient (for example, by not blocking while waiting on a slow task).

Parallelism is about execution: performing multiple tasks simultaneously by adding more resources, such as more threads running on multiple CPU cores.

FAQ

What’s the difference between concurrency and parallelism?

Concurrency is about structuring tasks so they can be managed independently, even if they don’t run at the same time. Parallelism is about executing multiple tasks simultaneously by using more resources.

Does parallelism imply concurrency?

No, parallelism does not necessarily imply concurrency. A task can be executed in parallel without being designed to handle its sub-parts independently. For example, processing multiple user requests in parallel doesn’t mean each request is broken into independently managed sub-tasks.

Does concurrency imply parallelism?

No, concurrency does not necessarily imply parallelism. Concurrency allows tasks to be structured to run separately, but they may still execute one after another. For example, coroutines allow non-blocking execution by switching between tasks efficiently, but they don’t necessarily run in parallel.
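Go has no coroutine keyword, so the minimal sketch below uses goroutines as a stand-in to show concurrency without parallelism. Calling `runtime.GOMAXPROCS(1)` allows only one OS thread to execute Go code at a time, so the goroutines are interleaved rather than run simultaneously. The names `brew` and `brewAllOnOneThread` are hypothetical.

```go
package main

import (
	"fmt"
	"runtime"
	"sync"
)

// brew is a hypothetical task standing in for any independent unit of work.
func brew(order string) string { return order + " ready" }

// brewAllOnOneThread runs one goroutine per order while allowing only one
// OS thread to execute Go code at a time. The work is still structured
// concurrently, but the goroutines are interleaved rather than run in parallel.
func brewAllOnOneThread(orders []string) []string {
	prev := runtime.GOMAXPROCS(1)
	defer runtime.GOMAXPROCS(prev) // restore the previous setting

	results := make([]string, len(orders))
	var wg sync.WaitGroup
	for i, o := range orders {
		wg.Add(1)
		go func(i int, o string) {
			defer wg.Done()
			results[i] = brew(o) // each goroutine writes only its own slot
		}(i, o)
	}
	wg.Wait()
	return results
}

func main() {
	fmt.Println(brewAllOnOneThread([]string{"latte", "espresso", "mocha"}))
	// [latte ready espresso ready mocha ready]
}
```

Dropping the `GOMAXPROCS(1)` call lets the same code run on several cores without any change to its structure.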

Where to use concurrency?

Concurrency is useful when structuring a system to handle multiple tasks independently, even if they don’t run at the same time, for example, to keep taking new work while waiting on a slow task. As discussed, it can also enable parallelism, allowing us to parallelize some parts while keeping others single-threaded.

Where to use parallelism?

We can use parallelism for heavy CPU-bound tasks, such as data processing, numerical computations, or graphics rendering, to utilize multiple cores efficiently. We can also use it for I/O-bound tasks, such as handling multiple requests or file operations, to maximize throughput.

Where not to use parallelism or concurrency?

Avoid them when tasks are lightweight or require frequent synchronization, as the coordination overhead may outweigh the benefits. Both can also be inefficient for memory-bound tasks, where memory bandwidth, not CPU time, is the bottleneck.

Is multithreading parallelism or concurrency?

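Multithreading can represent both. Splitting a program into threads is a way to structure it concurrently; whether those threads also run in parallel depends on having multiple CPU cores available. On a single core, the threads are interleaved rather than executed simultaneously.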

📚 Resources


Source

Concurrency is not Parallelism by Rob Pike
